Defining DevOps precisely, and drawing its boundaries, is difficult even for experts who have devoted half of their careers to the field. In this short article, we will at least briefly explain what the role entails and, on the other hand, what it usually does not. The focus here is on developers, system administrators and deployment managers (i.e., the so-called operations area).

In the early days of technological development, a project team building applications consisted of developers, analysts, testers and system administrators, as well as network and hardware specialists, and half of the potential obstacles to success could be avoided simply by keeping that team coordinated. Simply put: the developers created an application and handed it over to the system administrators, who then deployed it (possibly using automated tools) on hardware in the server room.

Then, not so long ago, so-called agile development took off. It sped up the whole process dramatically, and communication between developers and operations grew increasingly complex. Minor problems could now keep a product (or an update) from reaching a client who was anxiously waiting for it, or the delivered product could ship with major issues. The cause was, and often still is, poor communication between the various parts of the team.

As we said, there are developers and then there is operations. The two “camps” do try to communicate, but in practice it is very complicated: each speaks, in a sense, a different language, or at least a different dialect. What is simple from the development perspective may not be deployable on the servers, where the infrastructure has to be taken into account. Conversely, things that are easy to handle on the infrastructure side can be a hard nut to crack for developers.

What would happen if we took a developer and sent them to study operations? Or, from the other side, took someone from operations and sent them to scout out what is happening in development? That is how we get someone who could call themselves a DevOps specialist. But to “earn” the title, they need to understand more than just what the product is made of and where it is deployed. First and foremost, they need to change their mindset.

In practice, this means a whole range of practices that automate and standardize the processes between software development and operations, so that software can be built, tested and released more quickly and reliably.

New Mindset + New Tools + New Skills = DevOps

You build it, you run it!

The basic idea is that DevOps is not just about technology; it is a whole development paradigm. For a company to work as it should, it needs to change not only the applications it uses but its whole approach to development, testing and deployment to production. Indeed, the way we think about the entire process has to change.

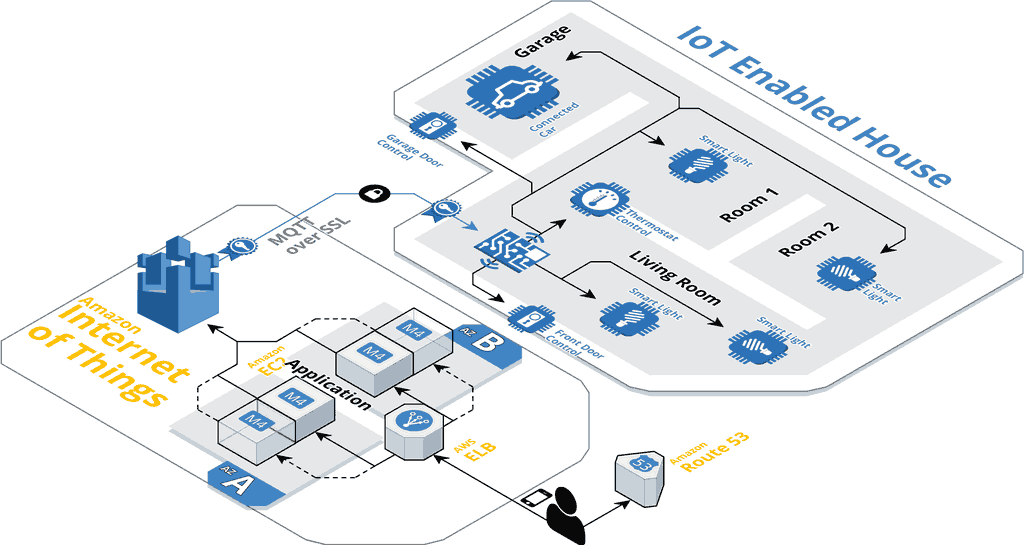

What used to be a utopian dream is now reality: it is possible to rent whole clusters, including administration and connections to services such as databases (e.g., PostgreSQL, MySQL and CockroachDB), queues (e.g., Kafka and RabbitMQ), analytical systems (Hadoop), logging and monitoring infrastructure (Elasticsearch, Kibana, Grafana), as well as various IoT services and REST APIs. And what better way to speed up the whole journey from creating an application to deploying it than knowing how to run these applications yourself?

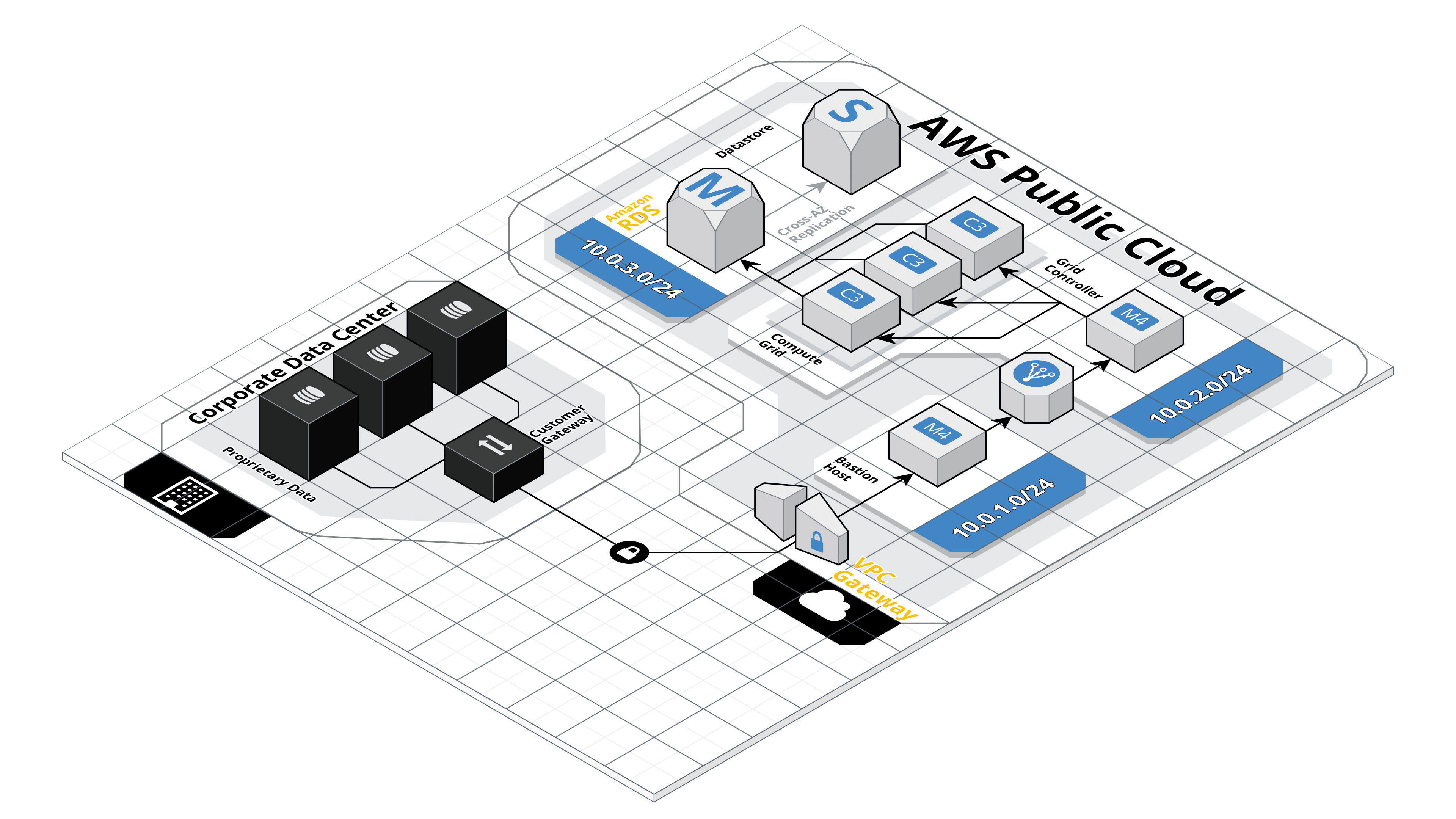

Hybrid Cloud Architecture

Virtual Private Cloud

If a company operates an application, the trend nowadays is to use the cloud rather than rely on its own on-premise infrastructure. Cloud infrastructure today can be optimized for high availability and low latency, and it can even be set up so that, for instance, customers from the Czech Republic are served from a data center in Germany while French customers are served from one in France. Modern clouds meet high security standards, and another advantage is that a range of related technologies can be consumed as a service. In practice, this means companies do not need to employ their own specialists to install and maintain the infrastructure, since they get all of that as a service. They then run their applications on this infrastructure in the form of so-called microservices. This saves both manpower (which is at a premium nowadays) and money, so it is important to develop new applications as cloud-native. The most frequently used clouds are Azure, AWS and Google Cloud.
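As a rough illustration of the Czech/German/French example, the routing decision can be sketched as a lookup from the customer's country to a cloud region. In practice this is usually handled at the DNS or load-balancer level (for example with geolocation routing policies) rather than in application code. The mapping, the default region and the function name `region_for` are hypothetical; `eu-central-1` (Frankfurt) and `eu-west-3` (Paris) are real AWS region codes.

```python
# Hypothetical mapping of customer country (ISO 3166-1 alpha-2 code) to a
# cloud region. The region codes are real AWS regions; the mapping itself
# is only an illustrative assumption.
REGION_BY_COUNTRY = {
    "CZ": "eu-central-1",  # Czech customers served from Frankfurt, Germany
    "FR": "eu-west-3",     # French customers served from Paris, France
}
DEFAULT_REGION = "eu-central-1"  # fallback for countries without an explicit rule


def region_for(country_code: str) -> str:
    """Return the region that should serve a customer from the given country."""
    return REGION_BY_COUNTRY.get(country_code.upper(), DEFAULT_REGION)


if __name__ == "__main__":
    for country in ("cz", "FR", "SK"):
        print(country, "->", region_for(country))
```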

Internet of Things Architecture

Microservice architecture

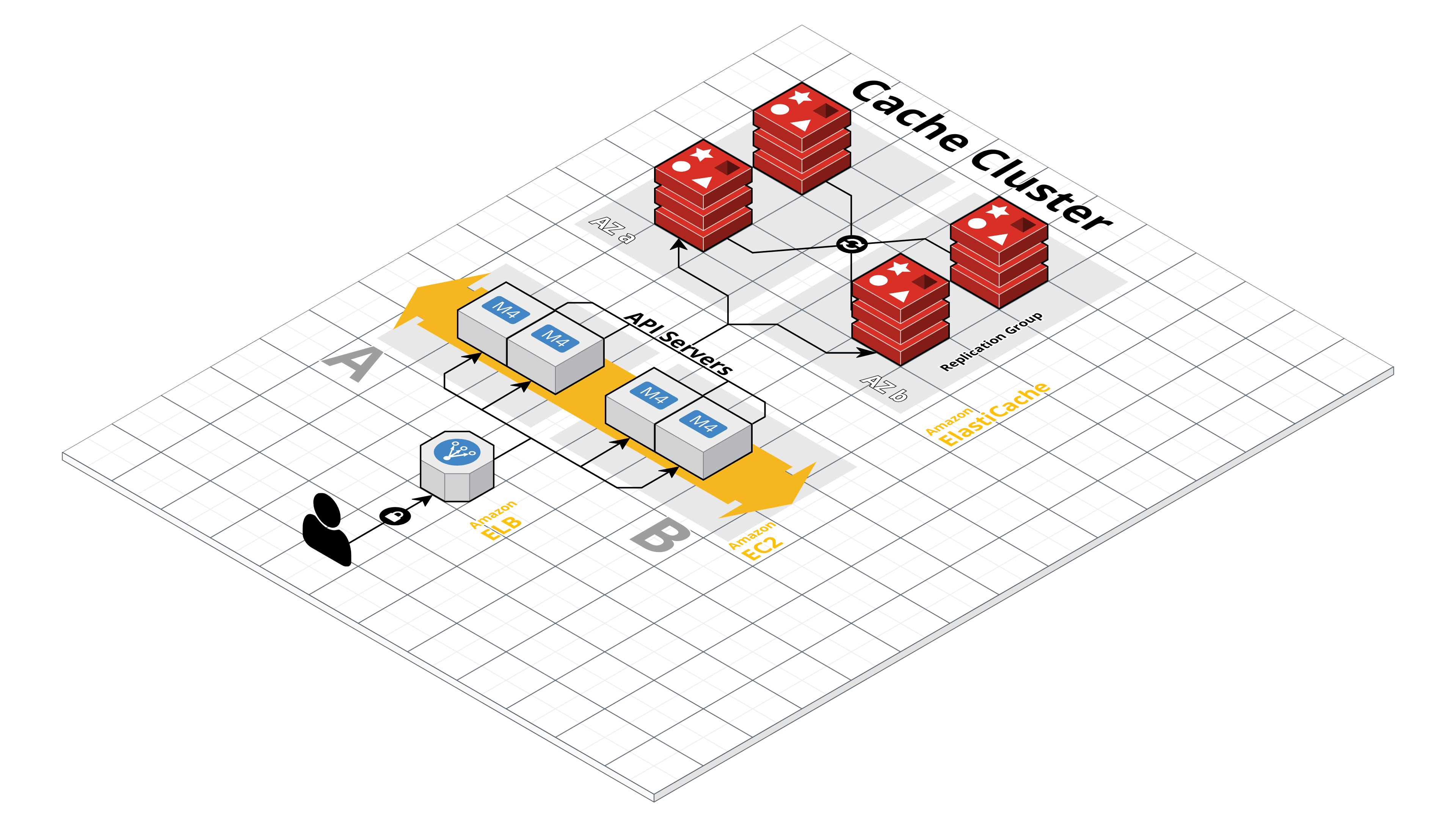

Most applications used to be developed as monoliths. Today, however, applications commonly consist of smaller parts that communicate with each other through well-defined interfaces, typically APIs. The advantage? A monolithic application might in some cases need, say, fifteen minutes to start up, while a smaller service takes only tens of seconds. We always strive to run microservice architectures in the Platform as a Service or Software as a Service model.
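To make the idea of small services talking through an interface concrete, here is a minimal sketch of one hypothetical `inventory` microservice using only Python's standard library. It exposes a tiny HTTP/JSON interface, and `fetch_json` shows how another service would call it. The service name, endpoints and data are assumptions for illustration; a production service would typically use a web framework and its own datastore.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

SERVICE_NAME = "inventory"         # hypothetical service name
STOCK = {"sku-1": 12, "sku-2": 0}  # hypothetical data owned by this service


class InventoryHandler(BaseHTTPRequestHandler):
    """The service's whole public interface: a few GET endpoints returning JSON."""

    def _send_json(self, status: int, payload: dict) -> None:
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def do_GET(self) -> None:
        if self.path == "/health":
            # Lightweight endpoint that orchestrators and other services can poll.
            self._send_json(200, {"status": "ok", "service": SERVICE_NAME})
        elif self.path.startswith("/stock/"):
            sku = self.path[len("/stock/"):]
            if sku in STOCK:
                self._send_json(200, {"sku": sku, "quantity": STOCK[sku]})
            else:
                self._send_json(404, {"error": "unknown sku"})
        else:
            self._send_json(404, {"error": "not found"})

    def log_message(self, format, *args) -> None:
        pass  # silence the default per-request logging for the demo


def make_server(port: int = 0) -> ThreadingHTTPServer:
    """Create the server on localhost; port 0 lets the OS pick a free port."""
    return ThreadingHTTPServer(("127.0.0.1", port), InventoryHandler)


# Direct connection without any environment proxy, since the demo talks to localhost.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def fetch_json(url: str) -> dict:
    """How a calling service would use the interface: a plain HTTP GET returning JSON."""
    with _OPENER.open(url, timeout=5) as response:
        return json.load(response)


if __name__ == "__main__":
    server = make_server()
    threading.Thread(target=server.serve_forever, daemon=True).start()
    base_url = f"http://127.0.0.1:{server.server_address[1]}"
    print(fetch_json(base_url + "/health"))
    print(fetch_json(base_url + "/stock/sku-1"))
    server.shutdown()
    server.server_close()
```

Because the service keeps its data behind its interface, it can be deployed, scaled or replaced without touching the services that call it.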

One popular methodology in this area is the Twelve-Factor App, essentially a set of rules that make development significantly easier to track and manage, as long as the whole team follows them. It describes how to handle the codebase, where to store configuration, how to treat backing services and builds, and how to deal with scaling, logs and admin processes.
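One of the Twelve-Factor rules (factor III, “Config”) is to keep configuration in environment variables rather than in the code. A minimal Python sketch, assuming hypothetical variable names `DATABASE_URL`, `QUEUE_URL` and `LOG_LEVEL`:

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class Config:
    database_url: str
    queue_url: str
    log_level: str


def load_config(env: Optional[Mapping[str, str]] = None) -> Config:
    """Read configuration from environment variables (Twelve-Factor, factor III).

    The variable names are hypothetical. The same build runs unchanged in
    dev, test and production; only the environment it starts in differs.
    """
    env = os.environ if env is None else env
    try:
        return Config(
            database_url=env["DATABASE_URL"],        # required: fail fast if missing
            queue_url=env["QUEUE_URL"],              # required
            log_level=env.get("LOG_LEVEL", "INFO"),  # optional, with a default
        )
    except KeyError as missing:
        raise RuntimeError(f"missing required environment variable {missing}") from None
```

The application then reads its settings from whatever environment it is started in, e.g. `DATABASE_URL=postgresql://db/app QUEUE_URL=amqp://mq python app.py` (the values and `app.py` are placeholders), so no environment-specific values are baked into the build.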

Caching Cluster Architecture

Serverless architecture

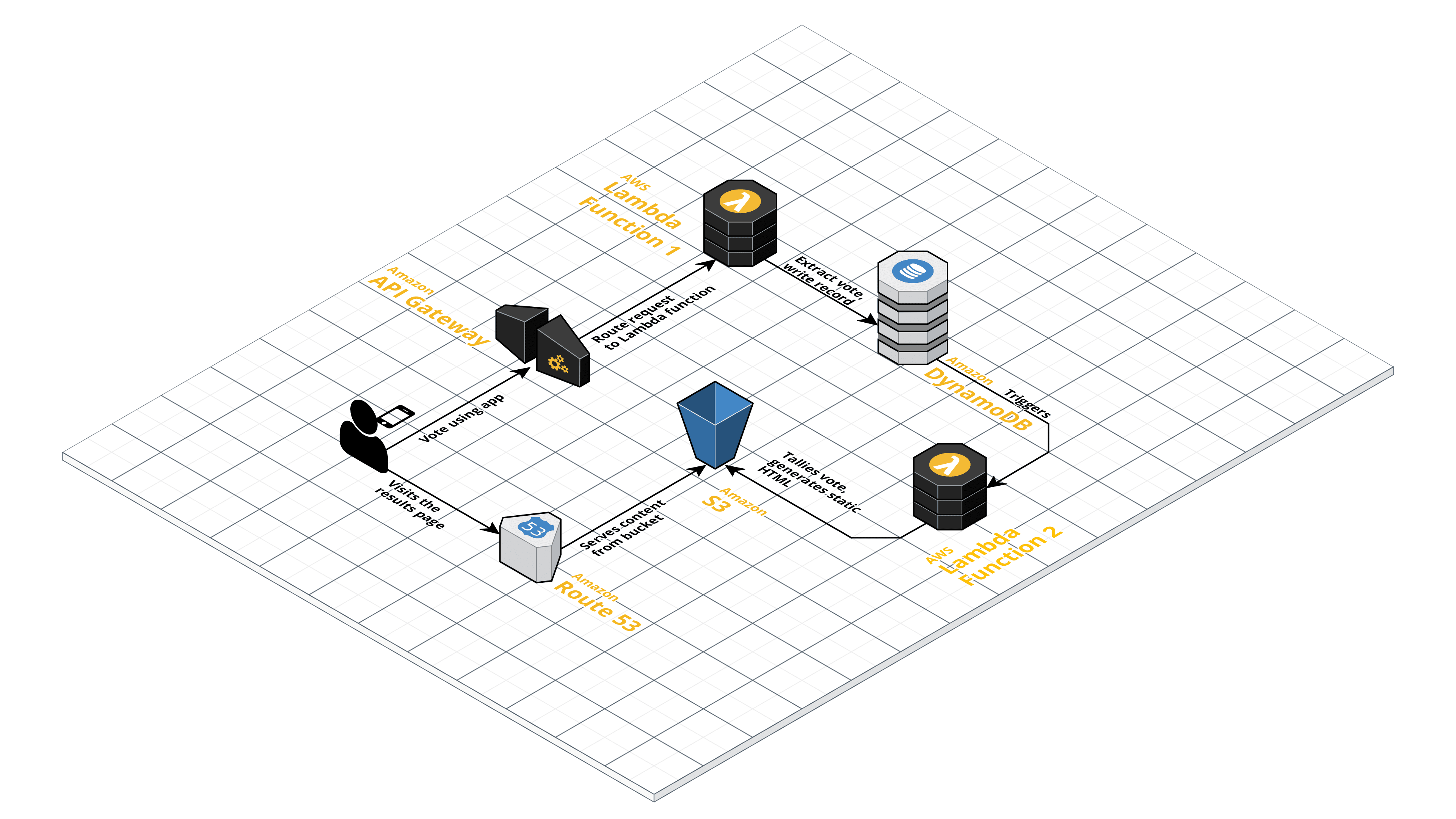

Another highly interesting building block of modern application architecture is serverless computing. Basically, one takes part of the code from the aforementioned smaller applications (code that is more resource-intensive or does not need to run continuously) and runs it through a service such as AWS Lambda or Azure Functions. The platform starts short-lived function instances on demand, computes the results and returns them to the calling services. Scaling can then also be applied at the level of individual functions, which can run in parallel and independently of each other.
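AWS Lambda's Python runtime calls a handler function of the form `handler(event, context)` (Azure Functions uses a different signature). The sketch below assumes a hypothetical event shape, `{"values": [...]}`, and returns a response in the `statusCode`/`body` shape used by API Gateway proxy integrations. Because the function keeps no state between calls, independent invocations can run in parallel; the `__main__` block only simulates that locally with a thread pool, whereas in the cloud the platform starts and scales the instances itself.

```python
import json
from concurrent.futures import ThreadPoolExecutor


def lambda_handler(event, context):
    """Stateless function in the (event, context) form AWS Lambda uses for Python.

    The event shape {"values": [...]} is a hypothetical example.
    """
    values = event.get("values", [])
    if not values:
        return {"statusCode": 400, "body": json.dumps({"error": "no values"})}
    result = {"count": len(values), "mean": sum(values) / len(values)}
    return {"statusCode": 200, "body": json.dumps(result)}


if __name__ == "__main__":
    # Local simulation only: each event is handled independently, as separate
    # Lambda invocations would be; no state is shared between them.
    events = [{"values": [1, 2, 3]}, {"values": [10, 20]}, {"values": []}]
    with ThreadPoolExecutor() as pool:
        for response in pool.map(lambda e: lambda_handler(e, None), events):
            print(response)
```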

Serverless Application Architecture

Automation

There is another characteristic of DevOps we have not mentioned so far: laziness. DevOps specialists strive to simplify their lives as much as possible through automation, and automation is the alpha and omega of DevOps today. We automate deployments, workflows, testing, infrastructure, even the management and review of user rights and access... essentially everything. When should you start automating? As soon as an activity needs to be carried out more than once.

Automated testing of code

To speed up development while making sure we have not broken anything, everything needs to be covered by tests written by the developers themselves. Taken to its logical extreme, this idea implies writing the test first and only then the function; after all, Test-Driven Development (TDD) is by now an established practice in software development. Writing tests yourself instead of waiting for testers is part of the DevOps mindset mentioned above.
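The test-first cycle can be sketched in Python with the standard `unittest` module (the article's own stack is Java with JUnit, which follows the same pattern). The function `apply_discount` and its rules are hypothetical: the tests are written first and fail, then the simplest implementation is added to make them pass.

```python
import unittest


# Step 1 ("red"): the tests are written first and describe the expected
# behaviour of a hypothetical apply_discount function before it exists.
class ApplyDiscountTest(unittest.TestCase):
    def test_reduces_price_by_percentage(self):
        self.assertEqual(apply_discount(200.0, 10), 180.0)

    def test_rejects_invalid_percentage(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 150)


# Step 2 ("green"): the simplest implementation that makes the tests pass.
def apply_discount(price: float, percent: float) -> float:
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (100 - percent) / 100, 2)
```

Saved as, say, `discount_test.py`, it runs with `python -m unittest discount_test`; the next step of the cycle is refactoring while keeping the tests green.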

In the Java world, we do this with JUnit, Mockito, MockMvc, Selenium, Sonar and similar tools. So there are plenty of tools; what is sometimes lacking is developers' willingness to spend time on testing.
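Mockito's role, replacing a real dependency with a configurable fake, looks like this in Python's `unittest.mock`, shown here to keep all examples in one language. `OrderService` and its payment gateway are hypothetical; the comments point out the corresponding Mockito idioms (`when(...).thenReturn(...)` and `verify(...)`).

```python
import unittest
from unittest.mock import Mock


class OrderService:
    """Hypothetical service whose dependency (a payment gateway) is injected."""

    def __init__(self, payment_gateway):
        self.payment_gateway = payment_gateway

    def place_order(self, amount: float) -> str:
        if amount <= 0:
            raise ValueError("amount must be positive")
        # Delegate to the external dependency; in a unit test this is a mock.
        approved = self.payment_gateway.charge(amount)
        return "CONFIRMED" if approved else "REJECTED"


class OrderServiceTest(unittest.TestCase):
    def test_confirms_order_when_payment_is_approved(self):
        gateway = Mock()
        gateway.charge.return_value = True  # stub, like Mockito's when(...).thenReturn(...)
        self.assertEqual(OrderService(gateway).place_order(50.0), "CONFIRMED")
        gateway.charge.assert_called_once_with(50.0)  # like Mockito's verify(...)

    def test_rejects_order_when_payment_is_declined(self):
        gateway = Mock()
        gateway.charge.return_value = False
        self.assertEqual(OrderService(gateway).place_order(50.0), "REJECTED")

    def test_never_charges_an_invalid_amount(self):
        gateway = Mock()
        with self.assertRaises(ValueError):
            OrderService(gateway).place_order(0)
        gateway.charge.assert_not_called()
```

The test never touches a real payment system, so it is fast and deterministic enough to run on every commit.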

Automated workflows

Workflows are automated with tools such as Jenkins (CI/CD), GitLab, Container Registry and Jira. In practice, a developer pushes their code to GitLab, and an automated pipeline runs the unit tests, compiles the program and deploys it to the server environment, where it is then continuously monitored. Ideally, everything really does run on its own.
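Real pipelines are declared in the CI server's own format (for example a `Jenkinsfile` or `.gitlab-ci.yml`). The Python sketch below only models the core behaviour described above: stages run in a fixed order, and the pipeline stops at the first failing stage, so broken code never reaches deployment. The stage names and the placeholder commands are hypothetical.

```python
import subprocess
import sys
from dataclasses import dataclass
from typing import List


@dataclass
class Stage:
    name: str
    command: List[str]


def run_pipeline(stages: List[Stage]) -> bool:
    """Run the stages in order and stop at the first one that fails (fail fast)."""
    for stage in stages:
        print(f"--> {stage.name}", flush=True)
        result = subprocess.run(stage.command)
        if result.returncode != 0:
            print(f"stage '{stage.name}' failed with exit code "
                  f"{result.returncode}; skipping the remaining stages", flush=True)
            return False
    print("pipeline succeeded", flush=True)
    return True


if __name__ == "__main__":
    # Placeholder commands standing in for testing, building and deploying;
    # a Java project might run "mvn test" and "mvn package" here instead.
    py = sys.executable
    demo = [
        Stage("unit tests", [py, "-c", "print('all tests passed')"]),
        Stage("build", [py, "-c", "print('artifact built')"]),
        Stage("deploy", [py, "-c", "print('deployed to staging')"]),
    ]
    run_pipeline(demo)
```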

Automated infrastructure: Infrastructure as code!

The ideal end result is that everything always runs the same way in every environment, and that these environments can be created with a single click. Nobody ever needs to install an operating system by hand; everything should be scripted using templates. To create infrastructure as code, we first need to shield the application from the hardware, which is done with containerization tools such as Docker and Podman. We then deploy the containerized application to an orchestration platform, most frequently Kubernetes or OpenShift. Everything can also run on-premise, but that is not really what DevOps is about. Both Kubernetes and OpenShift can be launched in just a few clicks, and Kubernetes is offered as a managed service by all major providers (AWS EKS, Azure AKS and Google GKE).
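Once the application is packaged as a container image (with Docker or Podman), Kubernetes describes how to run it declaratively. The sketch below builds a standard `apps/v1` Deployment object in Python and prints it as JSON, which `kubectl apply -f` accepts as well as YAML. The app name, image reference, replica count and port are hypothetical.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 2, port: int = 8080) -> dict:
    """Build a Kubernetes Deployment (apps/v1) for a containerized app.

    Name, image, replicas and port are hypothetical parameters; the field
    layout follows the standard Deployment schema.
    """
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels of the pod template below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Redirect to a file and apply it, e.g. "kubectl apply -f deployment.json".
    manifest = deployment_manifest("orders", "registry.example.com/orders:1.0.0")
    print(json.dumps(manifest, indent=2))
```

Applying the same manifest to every cluster, changing only values such as the image tag, is what keeps dev, test and production running the same way.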

We have several options for the infrastructure. We can “generate” it from the comfort of a web browser or, preferably, write a template that lets the provider create the infrastructure directly through its API.

The most frequently used universal templating tool is Terraform. It integrates with all major providers, and it can also be used with on-premise servers. It is often easier and better, however, to write these templates in the provider's native tooling (for AWS, for instance, CloudFormation in YAML and JSON or, more recently, AWS CDK, which lets you describe the infrastructure in JavaScript, Java or Python, among others). This lets us make the most of the provider's capabilities. The template can then be launched, and even used to create an identical environment multiple times, which is useful for various dev/test environments. The applications themselves can be delivered into these environments with the usual tools, such as Jenkins, GitLab or Bitbucket.
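A minimal sketch of a parameterized native template: a CloudFormation template emitted as JSON from Python, to keep the examples in one language (Terraform would express the same idea in HCL). The stack contents, the bucket naming scheme and the `EnvName` parameter are hypothetical; the point is that one template deployed with different parameter values yields identical, independent environments.

```python
import json


def storage_template() -> dict:
    """A minimal CloudFormation template with one parameter and one resource.

    The bucket naming scheme is hypothetical; real S3 bucket names must be
    globally unique.
    """
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Parameters": {
            # The only thing that differs between environments.
            "EnvName": {"Type": "String", "AllowedValues": ["dev", "test", "prod"]},
        },
        "Resources": {
            "AssetsBucket": {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    # Fn::Sub substitutes the parameter value into the name.
                    "BucketName": {"Fn::Sub": "myapp-${EnvName}-assets"},
                    "Tags": [{"Key": "environment", "Value": {"Ref": "EnvName"}}],
                },
            },
        },
        "Outputs": {
            # Ref on an S3 bucket resolves to the bucket's name.
            "BucketName": {"Value": {"Ref": "AssetsBucket"}},
        },
    }


if __name__ == "__main__":
    print(json.dumps(storage_template(), indent=2))
```

Saved as `storage.json`, each environment is then one command, e.g. `aws cloudformation deploy --template-file storage.json --stack-name myapp-dev --parameter-overrides EnvName=dev`, repeated with another stack name and `EnvName=test` for a test environment.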

Measurements

Our application is now in production, but that is not the end of it. We need to evaluate, analyze and debug it, and for that we need continuous metrics and analytical tools. The ELK Stack (Elasticsearch, Logstash and Kibana) is a bundle of tools that helps us collect logs and visualize them. Kibana lets us browse the logs in visual form, all in one place, which is great both for assessing an application's performance and for identifying the cause of problems. Aside from filtering errors, it can also display metrics such as CPU usage.
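Logs are easiest to search in Kibana when the application writes them in a structured form. A minimal Python sketch, assuming a JSON-lines format written to stdout and picked up by a shipper such as Logstash or Filebeat; the field names are an assumption, not something the ELK Stack requires.

```python
import json
import logging
import sys
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object.

    One JSON object per line is easy for a log shipper to parse and index in
    Elasticsearch, where Kibana can then filter on the individual fields.
    """

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        if record.exc_info:
            # Keep the stack trace in its own field so errors can be filtered.
            entry["exception"] = self.formatException(record.exc_info)
        return json.dumps(entry)


def configure_logging(service_name: str) -> logging.Logger:
    """Log to stdout and let the platform collect the stream."""
    handler = logging.StreamHandler(sys.stdout)
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger(service_name)
    logger.setLevel(logging.INFO)
    logger.handlers = [handler]
    logger.propagate = False
    return logger


if __name__ == "__main__":
    log = configure_logging("orders")
    log.info("order placed")
    try:
        1 / 0
    except ZeroDivisionError:
        log.exception("failed to compute total")
```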

Methodology

The waterfall approach, which dominated in past years, allows for careful development but falls short when it comes to speed and agility. That is why agile methodologies have become so popular: they let us split development into small chunks that can be handled independently. If you think about it, that is the foundation of the whole DevOps philosophy, from infrastructure up to methodology and vice versa. In practice, this means daily stand-ups and development in short sprints. Standardizing the whole development process is also important, covering analysis, development, testing and deployment, as well as monitoring the performance of the completed application.

Conclusion

The success of a DevOps project requires a combination of expertise from various areas, high-quality technologies and practical know-how, but first and foremost a change in how a team works and how developers think. Once all of that is in place, the results are worth it: a well-set-up project innovates faster, reacts quickly to business developments and requirements, makes teamwork more efficient, improves overall code quality and delivers more frequent releases.